While pioneering firms strive to reduce risk and increase productivity by embracing virtual design and construction (VDC), the vendors enabling VDC struggle to anticipate user needs and differentiate themselves from their competition without losing customers in swamps of technological confusion.

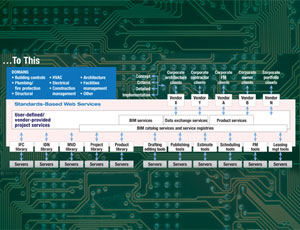

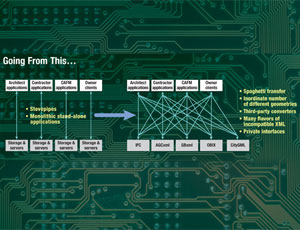

There are myriad products addressing discrete aspects of virtual design and construction. Most work independently and share their results with project planners by merging discipline-specific files in sophisticated viewers. Although one vision of the ultimate building information model includes access to all the data and operations from a single interface, developers are not there yet, and some say that may not even be such a good idea. For now, the focus is on performance, constructibility and data interoperability.

“We made some really significant progress,” says Phil Bernstein, vice president and head of the building industry division at San Rafael, Calif.-based Autodesk. “The transition from a theoretical asset to a viable tool has been made, and the question in the industry has changed from ‘Is it a good idea?’ to ‘How [do you] make things happen?’ Our users are now coming to us and saying, ‘I am into this, and I would like you to make the following specific improvements.’”

“There is a lot of stuff happening,” says Bhupinder Singh, senior vice president of Bentley Software at Bentley Systems Inc., Exton, Pa. “The industry is beginning to talk about performance and constructibility....There was an inordinate amount of effort on visualization in the beginning, but now it is pretty substantially shifted to how it performs and how constructible it is. It shifts the focus and the playing field.”

Discipline-specific modeling tools capture ideas, refine geometries, calculate loads, design connections, estimate, schedule, phase, plumb, wire, illuminate, heat, cool and code-check imaginary structures. The potential is to analyze, maximize and debug projects as much as possible before turning dirt.

Coordinated Management

One vision of building information modeling is that if merged, those models can fully define the project and empower coordinated management of all phases and disciplines over the lifecycle of the facility. But for the vendors, providing the required interoperability for all that data is tough. Their approaches to doing it get at some of the fundamental differences between them.

“The conceptual problem here is the rational relation between models operating at different scales with different representative schema, different business processes, across different discipline-views of a project,” says Bernstein. “This is a problem...the problem of discontinuous levels of abstraction. Designs don’t unfold in lockstep at the same level of details. That doesn’t map to the way people actually work. You need relationships between models that make sense.”

Autodesk’s flagship building-information-modeling design product, Revit, originally approached modeling by collecting building information into a single data set. But that approach is morphing as the Revit platform expands to address users’ desire to work in heterogeneous planning environments incorporating an array of software products and models from multiple disciplines. Bernstein says the growing suite of related products inside and outside of Autodesk’s portfolio, as well as those within the Revit family, including Revit Architecture, Revit MEP and Revit Structure, now use data-management schemes that adapt to the way project teams choose to work. “If you choose to keep data separate and link with a work process, you are free to do so,” he says. “As adoption rates go up, you tend to see different arrangements. Sometimes they are heavily locked together and sometimes completely separate.”

Bentley’s Singh says Bentley has been there all along, and its most recent product-suite release, known as V8i, is focused on collaboration across disparate products, operations, disciplines and geographies. “It was clear to us from the beginning that there were going to be lots and lots of tools in our users’ workflows that we could not hope to replace...Autodesk tools, special analysis tools....Our users have their own multidisciplinary supply chains and geographic environments...that’s the fundamental realization we bring to the table,” Singh says. “We have always viewed BIM as a process and modeling, as opposed to ‘the model.’”

Potential Chaos

But Singh admits there is potential for chaos in the “federated model” if the data-organizing, file-linking and transfer process is manual and subject to unintended, or “lossy,” changes. “Manual and lossy are two huge pain points,” he says. “The challenge is how to make it automatic and as non-lossy as we can make it, even with tools we don’t own. That’s what brings me to work every day.”

Bentley and Autodesk are wrestling with the desire of many users to run analysis programs within design software so less productive alternatives can be culled in real time as schemes develop. In most cases today, however, analysis is performed in separate programs by exporting designs for evaluation, whose results are considered in subsequent design sessions.

For the most part, concurrent analysis within design software is impractical, either because the taxonomies of analysis tools and design tools are quite different or because the processing demands of analysis are so intense that combining functions would overload desktop systems, Bernstein says. He believes users are better served if analysis tools run separately or are accessed over the Web, the way Autodesk’s Green Building Studio energy-analysis tool works.

“Green Building Studio needs to be run on something much heavier and faster. Users are better off not having to park all that computing power on their desktop,” Bernstein says. In those cases, “the physical and analytical models are never co-located. The mathematical models are always separate from the other, and you are always going to have the sort of federated collection of data. What we are all striving to get is a datacentric mind-set and appreciation of how we can bring it together for various degrees of specificity of details and use,” he says.

“The design authoring tool is good at what it is good at, which is making the design, and the analytical tool does what it is good at, which is reasoning about the design,” Bernstein adds.

Bentley’s Singh says Bentley’s approach ties everything together in its ProjectWise Navigator collaboration system. “From 2D drafting to higher semantic representation, we have always considered that we had multiple models,” he says. “To us, ProjectWise is the key element of the interoperability.”

A key collaboration tool for Autodesk is NavisWorks, which Bernstein describes as a philosophical analysis framework. “You are going to use this tool to mediate and understand the relationships between the parts and go back to the originator to make the changes,” he says.

Even though many professionals prefer to use third-party analysis tools that they trust and are accustomed to, there still is a stubborn grumble spanning the industry that says technology should permit any model and its data to be accessed effortlessly by any other software on the job or by the facility’s operators in the future, regardless of the authoring platform or vendor. Users and owners want data interoperability.

First Blush

By some definitions, the first blush of interoperability is here. Aggregate model viewers and conflict-resolution tools like Autodesk’s NavisWorks, Bentley’s ProjectWise Navigator, Vico Constructor, ArchiCAD 12’s Virtual Building Explorer and Tekla Structures combine various-format model files that have been created by an array of discipline-specific tools. That enables reconciliation of designs and virtual assembly sequences and improves construction planning. While that is a powerful start, it falls well short of true interoperability for most visionaries.

The issue is a key one for the Open Geospatial Consortium, a consensus standards-setting group of 377 companies and agencies that delivered the OpenGIS Specifications that have “geo-enabled” the Web and wireless and location-based services so they translate automatically from one application to another. OGC now is working with the National Institute of Building Sciences to improve construction-industry data exchange and Web-enabled BIM interoperability the same way.

According to OGC’s Louis Hecht Jr., the consortium defines interoperability as “the ability of two or more autonomous, heterogeneous, distributed digital entities (e.g., systems, applications, procedures, directories, inventories or data sets) to communicate and cooperate among themselves despite differences in language, context, format or content. These entities should be able to interact with one another in meaningful ways without special effort by the user, the data producer or consumer, be it human or machine.”

OGC is working with NIBS’s buildingSMARTalliance (formerly the North American Chapter of the International Alliance for Interoperability) and its National Building Information Model Standard (NBIMS) project committee to bring this level of BIM interoperability to construction. It seeks to do so by building on IAI’s Industry Foundation Classes, which were created over the past decade as a library of definitions and relationships for construction objects that software developers can use in their products so that they can share building-component data with others.

OGC is trying to apply the same approach it used to convince geospatial software developers to incorporate standard application programming interfaces to allow their data to flow. It hopes to convince construction design and analysis software developers to integrate IFC compatibility into their software programs in a similar way.

“It won’t affect the vendors’ ‘black box,’ but it will expose each of their black boxes to public interfaces,” says Louis Hecht Jr., OGC’s business development director who is spearheading the effort. He says OGC’s experience shows there is strength in numbers, and the construction organizations supporting interoperability promise to provide that muscle.

“You are only going to get that [influence] when there are enough individuals as a collective to tell the vendor that’s what we want,” Hecht says. “I am here to tell you this is the approach that will end up being the one that works.”

Intersections

OGC is “doing a great job in certain areas defining standard protocols by which an application can talk to a map server to serve up maps,” says Singh. “OGC went down a path of protocols and interfaces. The philosophy is right. The more you look at interfaces, the better off you are. Rather than seek the union of all business needs, it is the intersection of them. I think that is the only way to go. You have to define the problem as the intersection, and then you have a chance of solving it.”

NBIMS is improving its administrative structure to process, publish and maintain building-information-modeling standards, expanding on the work of the U.S. National CAD Standard, the Facility Maintenance and Operations Committee’s Construction Operations Building Information Exchange (COBIE) initiative, and AGCxml, a cooperative project it has with the Associated General Contractors of America.

More immediately, work on improving compatibility between Bentley and Autodesk files and applications continues as a result of an agreement last July between the two companies to exchange software object libraries and support each other’s application programming-interface tools. The “net” of the agreement will allow Bentley to bring DWG file-format and application-level compatibility to market faster, says Singh. Bentley is not privy to the necessary information until each new version of Autodesk’s products is commercially released, but once it is out, the process can accelerate, he adds. “We are shooting to get it out in a [matter] of months. In the past, it would be a [matter] of quarters,” he says.

Autodesk engineers are “slugging away at it” and are making progress on implementation of the agreement, says Bernstein. “We are working on it,” he adds, citing Securities and Exchange Commission restrictions on further comment. He says he doubts exchange capability will ever be a finished project if “Bentley is as diligent about improving their data structure as we are and we continue to improve. The most important thing is, we have established this relationship.”

Singh cautions, “BIM is a lot more than the file-format compatibility: It removes friction and irritant, but it’s not the panacea.”

Feedback

The key to progress for any software developer is listening to feedback from customers, say both Bentley and Autodesk, and they both claim to pay close attention, although their customer relationships are different. Autodesk sells through a network of resellers; Bentley sells direct. “We continuously have a channel between us and our users,” says Singh. Feedback comes in exchanges between developers and users on Bentley’s online users’ group, which he says Bentley principals monitor daily; formal technical support requests; and personal contacts between customers and account managers.

“It gives us a sense at an ambient level of what’s going on,” says Singh. “It’s in our DNA as a company. We periodically survey all the issues and categorize them into different buckets.” One bucket is requested changes or enhancements to a feature, another is user error, and the last is “flat-out bugs.” Singh says the company addresses big issues with four-week or six-week development sprints and reprioritizes issues in the engineering development process. “Maybe I am wearing somewhat rose-colored glasses, but that part of our business works pretty well. It feeds back into training, the next bug issue or our product-feature set.”

Bernstein says his network people, resellers and technical specialists report that the questions they are starting to get most often concern the process implications of BIM rather than menus and dialogs. “We find ourselves spending a lot of time talking about processes, and we don’t mention product. It is all about how technology is changing practice,” he says.